You might want to start with the first Liberation Technology post over on Punk Pedagogy!

When Grace Hopper invented the first compiler — turning Roman characters typed on a Teletype machine into basic, digital computer instructions — it was a bold move toward making computation accessible to more humans than the tiny number of mathematicians who had both access to — and the talent for — writing the raw assembly code that described a computing process.

She was using a recent, powerful human interface innovation, the Teletype, which was, itself, an adaptation of telegraph technology, but drawing from another recent information technology, the Linotype, used for laying out newspaper pages in lead type.

The Linotype was an adaptation of the typewriter interface to the Gutenberg press, itself derived from woodblock presses that used single-purpose plates combined with design principles developed five centuries earlier in Chinese presses.

In each case, the creators of a new medium found ways to integrate cutting-edge human interface with the creative powers of an earlier medium.

And yet, in the year 2021, what we do consistently in programming is emulate a Teletype. We use lines of code. In some languages, there’s no particular meaning to a line break other than giving our eyes a rest. Few languages use anything other than a one-dimensional list of Roman alphanumeric characters and punctuation as a means of programming. To reduce the confusion, some IDEs color-code text by type; rarely, they allow some part of the Unicode character set beyond what a Teletype of 1940 could print. Capitalization conventions exist in many contradictory forms without an IDE even noticing, leading to errors, arguments, and public shaming, rather than a shared system of symbols.

The front end of the Liberation Technology IDE has to be something that a new user can freely play with, implementing and debugging ideas, not chasing down syntax errors. When a creator makes a mistake, what demands their attention must be an error in their design or their logic, not a lapse in their ability to accurately reproduce the rigid orthography required by a compiler.

I’ve seen a few ways of addressing such issues: HyperTalk’s error-tolerant flexibility; the block model of Scratch (and its descendant, Blockly); Tina Quach’s fault-tolerant, speech-interpreting Codi; and the patch model of Max/MSP (and other, usually musically oriented, environments). I know what I want, but this is design, not art. It has to satisfy the needs of an entire audience, and in this case that intended audience is quite diverse.

So let’s talk about what a human-computer interface does first, and then let’s talk about what these particular interfaces do, and then maybe what some hypothetical future interfaces might look like.

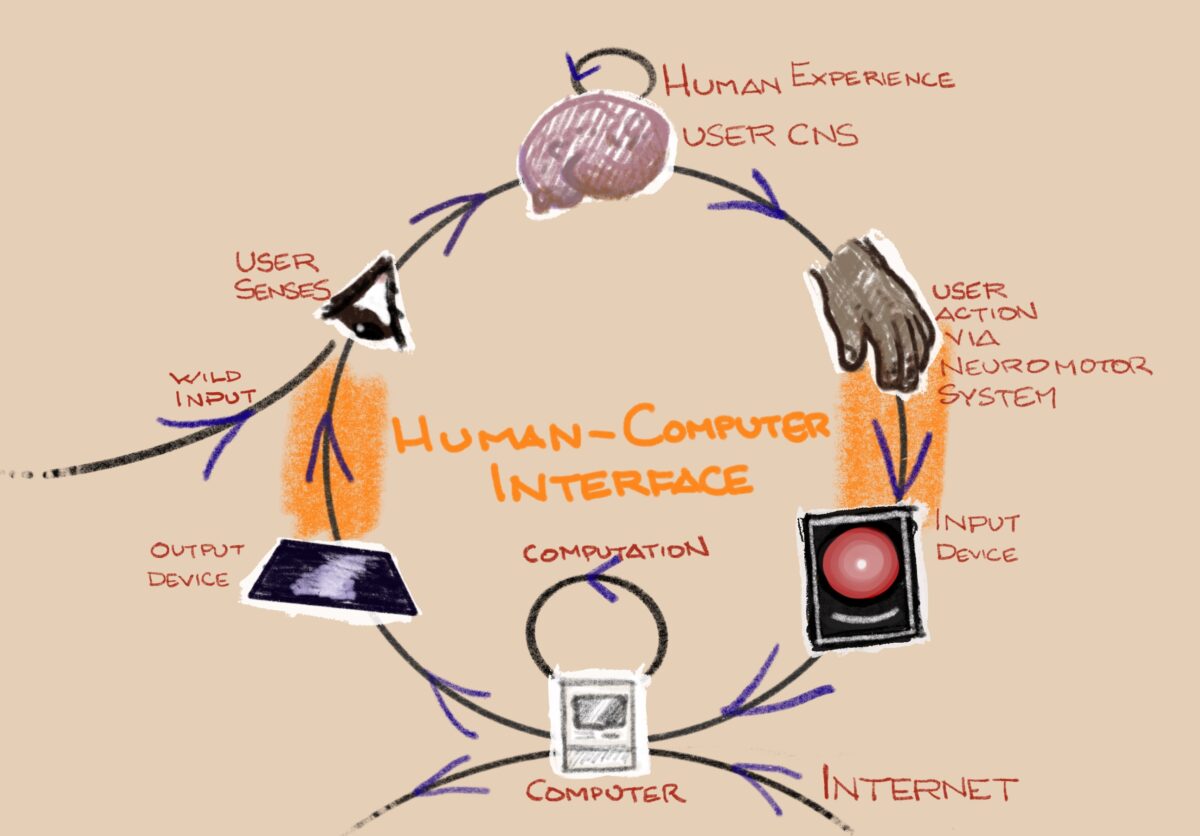

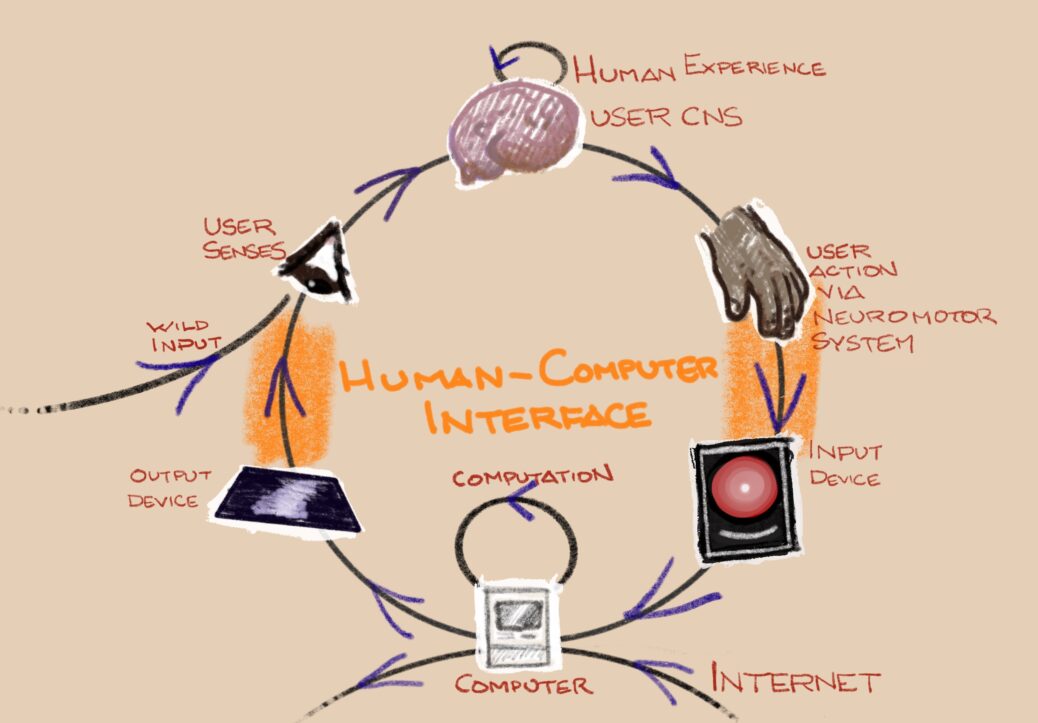

A human-computer interface is a system that connects the nervous system of a user (in this case, a creator) to the capabilities of the machine in a feedback loop:

We can start with the machine taking input: The machine takes in information from the user, uses that information according to its programming, then sends feedback information through the interface to the user’s peripheral nervous system — through the eyes, if it uses a screen or lights; or through touch-sensitive nerves for haptic feedback; or through the ears with speakers.

These inputs feed the user’s central nervous and limbic systems, which use them to alter the user’s perception.

That perception informs them of how to send signals to their motor neurons, which then move their muscles to reorient parts of their body, from their eyes to fingers to sweat glands.

The machine is able to perceive these changes, usually through the mechanical manipulation of potentiometers or switches, but sometimes also through galvanic sensors on the skin and, increasingly, cameras, microphones, and other, more ambiently-aware systems.

The machine then takes this input and the feedback cycle repeats.
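As a rough sketch of that cycle, assume a machine whose only input is a knob and whose only feedback is a number on a display. The names `Machine`, `User`, and `runLoop` are hypothetical, invented here for illustration; nothing like them appears in any real interface toolkit.

```cpp
#include <cassert>

// Hypothetical machine: one knob for input, one number shown as feedback.
struct Machine {
    int knob = 0;                             // input from the user's hands
    int display() const { return knob * 2; }  // its "programming": input -> feedback
};

// Hypothetical user: perceives the display and decides how to move.
struct User {
    int goal;  // what the user wants to see on the display
    int decide(int seen) const {
        if (seen < goal) return +1;  // turn the knob up
        if (seen > goal) return -1;  // turn the knob down
        return 0;                    // satisfied: no movement
    }
};

// Runs the loop from whatever initial condition the machine is in and
// returns how many cycles passed before the user stopped adjusting.
int runLoop(Machine& machine, const User& user, int maxCycles) {
    assert(maxCycles >= 0);
    for (int cycle = 0; cycle < maxCycles; ++cycle) {
        int seen = machine.display();  // machine -> user's senses
        int turn = user.decide(seen);  // perception -> motor decision
        if (turn == 0) return cycle;
        machine.knob += turn;          // muscles -> machine input
    }
    return maxCycles;  // e.g. an odd goal, which this display can never show
}
```

With the knob starting at 0 and a goal of 10, the user stops adjusting after five cycles; with the knob starting at 8, after three. The loop is the same in both cases, and only the initial condition differs.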

Because this is a feedback system, you can “start” at any point; it’s an arbitrary design decision made according to the needs and assumptions of the design, but it is really important to know where to establish the initial conditions to that loop. For instance, where does the interaction loop with your phone begin? Probably when you first powered it on. It says “Hello.” And then it’s a process of years of your phone and you asking each other questions and giving each other answers. Just because it’s in your pocket doesn’t mean it’s out of the loop; you give it motion and location data; you feel it in your pocket when someone texts you or when you’re supposed to take out the garbage. You know how sometimes you put it in the wrong pocket or on the table, and then your usual pocket feels empty? That’s because you’ve left that feedback loop.

In short, your phone is a highly integrated part of your nervous system. It’s sometimes called a metacortex, even.

Programming: Telling the computer how to enter the feedback loop

For comparison, when you sit down to learn to program, what do you see? The Arduino IDE has a really interesting feature: it starts with two functions onscreen:

void setup() {

// put your setup code here, to run once:

}

…and:

void loop() {

// put your main code here, to run repeatedly:

}

While C syntax is a real problem (a 50-year-old set of conventions that perfectly discourage most potential programmers), this particular choice of entry point is really smart. It tells a new programmer a few really important things:

- Where do I put my code that I want to run as soon as I turn on my creation?

- Where do I put my code that I want to run the whole time it’s on?

- What a function looks like. That is, this code that shows when you select “New” from the File menu implicitly demonstrates how if(){} works, and you’re gonna need if almost immediately.

Because what’s happening here is that the first move in that loop is being made by the design of the Arduino IDE. It’s using its screen to show the user’s eyes shapes that the user’s brain assembles into words, which tell the user what structure to use next and how to use their hands to operate the keyboard and mouse to make things happen. Then, when they see things change onscreen, the loop continues.

Once you’ve written a few programs, you probably remember to delete the comments or insert your own. Eventually, you learn where global variables go (it’s before setup()! It might be good to express that fact when you open your first blank file!). But I’ve seen enormous, complex pieces of signal generation code that still include these comments: hundreds of lines of code interpreting signals from capacitive sensors, building waveforms, speaking MIDI, and error checking itself along the way, all surrounded by the redundant, unnecessary // put your main code here, to run repeatedly:. The initial comments clearly don’t get in the way of experienced programmers.

I think it would be good if that startup file demonstrated some other, more optional basics, particularly analogRead(), digitalRead(), analogWrite(), digitalWrite(), some example global variables, and an if() statement. But at that point, we’re adding yet another patch to a language design that’s almost twice as old as the average human. We’re not going to solve this problem with additional duct tape.
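For the sake of argument, here is a minimal sketch of what such a starter file might look like. It is not what the Arduino IDE ships. The pin numbers assume an Uno-style board, and the variable names are made up. The block guarded by `#ifndef ARDUINO` is only a set of desktop stand-ins I've assumed so the file can be compiled and poked at without a board; on real hardware the Arduino core supplies those functions.

```cpp
// Hypothetical starter sketch; not the file the Arduino IDE actually ships.

#ifndef ARDUINO
// Desktop stand-ins (assumptions for illustration) so the sketch also
// compiles off-board. On a real board the Arduino core provides these.
#include <cassert>
const int INPUT = 0, OUTPUT = 1, LOW = 0, HIGH = 1, A0 = 14;
int fakeKnob = 0;         // what the stand-in analogRead() returns
int fakeButton = LOW;     // what the stand-in digitalRead() returns
int lastAnalogOut = -1;   // last value passed to analogWrite()
int lastDigitalOut = -1;  // last value passed to digitalWrite()
void pinMode(int, int) {}
int analogRead(int) { return fakeKnob; }
int digitalRead(int) { return fakeButton; }
void analogWrite(int, int value) {
    assert(value >= 0 && value <= 255);  // PWM duty cycle range
    lastAnalogOut = value;
}
void digitalWrite(int, int value) { lastDigitalOut = value; }
#endif

// Global variables go here, before setup().
const int knobPin = A0;    // analog input, e.g. a potentiometer
const int buttonPin = 2;   // digital input, e.g. a pushbutton
const int dimmerPin = 9;   // PWM-capable output on an Uno
const int lampPin = 13;    // digital output (the built-in LED on an Uno)

void setup() {
    // put your setup code here, to run once:
    pinMode(buttonPin, INPUT);
    pinMode(dimmerPin, OUTPUT);
    pinMode(lampPin, OUTPUT);
}

void loop() {
    // put your main code here, to run repeatedly:
    int knob = analogRead(knobPin);    // 0 to 1023 on an Uno
    analogWrite(dimmerPin, knob / 4);  // scaled to 0 to 255

    if (digitalRead(buttonPin) == HIGH) {
        digitalWrite(lampPin, HIGH);
    } else {
        digitalWrite(lampPin, LOW);
    }
}
```

Even this small example shows the cost: the file is already three times longer than the original template, and every added line is one more piece of C orthography to get exactly right.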

Let’s look at some other ways that people have interacted with this programming interaction feedback loop.

HyperTalk: zooming from object to script to C

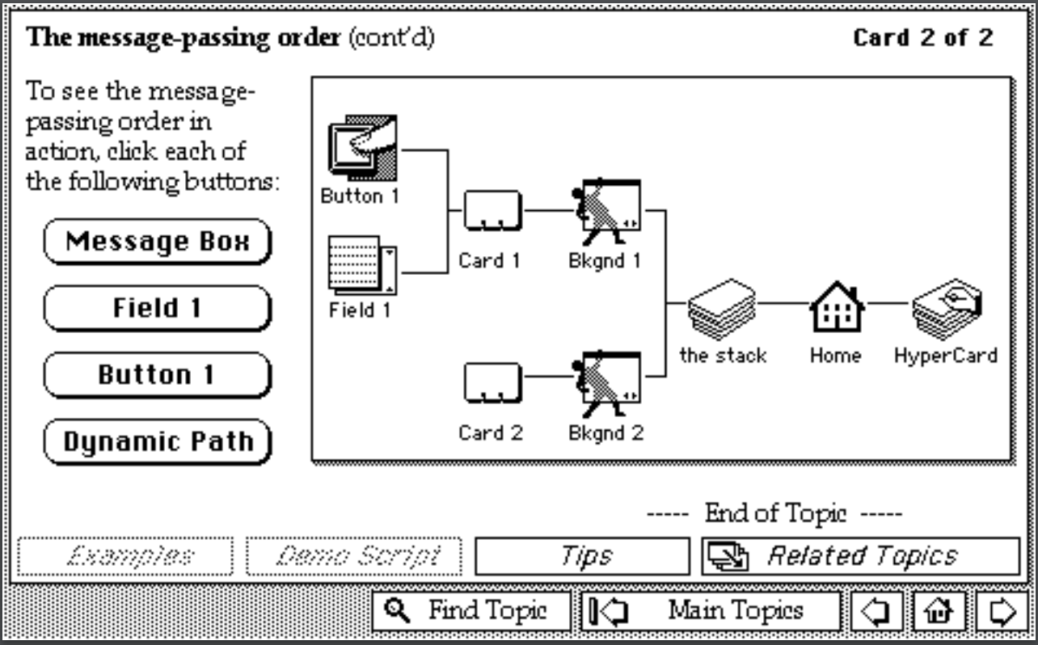

HyperTalk was the programming language behind HyperCard, the development environment included with every Mac from the late 80s through the mid-90s. It was, for many people, their first “scripting language”, and it featured the little gloved Mickey Mouse hand you now know from Web browsers.

When you opened up HyperCard, you probably did it through a “Stack”, an executable database containing both code and data. You were probably opening one of the included, useful stacks, like the Rolodex, which was all of HyperCard that most users ever experienced.

But eventually, many users would realize that they needed an additional field. Perhaps they needed a second phone number field for the fax machine.

So they would add a phone number field. They’d draw it as a rectangle on the card’s background, and then it would work.

And maybe you’d double click a button while you were editing the fields, and see that the “New Card” button had a little script in it that said something like,

on mouseUp

new card after this card

end mouseUp

And it would start to dawn on you that you could tell it to do stuff. “What if I want to make sure I’m not making a duplicate card?” you might think. So then you could look at how to do logic when you entered text into a field.

In many cases, it wasn’t long before that Rolodex was talking with your calendar stack so you could say who your phone call was with. And the stack was dialing the phone for you.

The interaction loop started when you started using built-in software that allowed you — invited you — to open up the hood and see how each button, field, card, stack, and sound effect worked. You’d click a button that did something clever, and you’d open it, and the answer was right there. It just showed how the programmer did it. And then you knew. Or you didn’t understand it, but you’d copy and paste it and change a thing or two, and then it worked. Or it didn’t and you’d have to debug, learning the whole time. Maybe you’d use a book, or maybe you’d learn something from your companions on a HyperCard listserv.

Eventually, you’d hit one of HyperCard’s pretty serious limits and start hunting for an external plugin — an XCMD, an eXternal CoMmanD — to extend it, written in C. You’d install the external into your stack, then go about making your thing.

And sometimes, just sometimes, you’d learn how to write that XCMD yourself, applying C to a high-level language, extending it for your own use and that of others. There was even a stack called CompileIt! that helped you write XCMDs that linked to the Macintosh OS’s APIs, opening up OS-level programming.

In HyperCard as it exists, one’s objects (e.g. buttons, fields) can call functions from a card, which can call functions from the background it shares with other cards, which can call functions from the stack shared by all the cards and backgrounds, which can call external functions installed into the stack by the stack author. Liberation Technology builds on this model of “zooming in” on objects to see a lower level of programming abstraction inside.
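That chain of lookups can be sketched in a few lines. This is my own illustration of the idea, not HyperCard's implementation; the `Level` struct, the `send` function, and any message names you register are hypothetical.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <string>

// One level of the hierarchy: a button, a card, a background, a stack,
// or the externals installed in a stack. All names are hypothetical.
struct Level {
    std::string name;
    std::map<std::string, std::function<std::string()>> handlers;
    const Level* next;  // where unhandled messages go; nullptr at the end
};

// Starts at the object that received the message and walks outward
// until some level has a handler for it.
std::string send(const Level& start, const std::string& message) {
    for (const Level* level = &start; level != nullptr; level = level->next) {
        auto found = level->handlers.find(message);
        if (found != level->handlers.end()) {
            assert(found->second);  // a registered handler must be callable
            return level->name + ": " + found->second();
        }
    }
    return "unhandled: " + message;
}
```

A message sent to a button that has its own handler stops right there. A message that only the externals understand passes through the card, the background, and the stack before anything answers. Each step outward is also a step down in abstraction, which is the “zooming” I want to keep.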

HyperCard never intended to dig all the way down to low-level programming, but it provided the framework for external, compiled executable code that could be called from within. Liberation Technology intends to fully connect the creative processes from high level authoring to scripting to high performance coding.

HyperTalk’s predecessor, Smalltalk, however, did intend to dig all the way down. Its intended function was to be the operating system. You could always open something up and change how it worked. Anything. Sadly, while it’s a programming environment beloved by its enthusiasts, the OS built around it was trapped inside the experimental Xerox PARC project and never became an affordable computer. There was a movement inside Apple to make HyperCard the native language of the Macintosh, but its power to show the user how it works interfered with the then-nascent idea of software intellectual property. I think it’s questionable whether the Smalltalk model can exist alongside patented software or, indeed, consumerism.

Scratch: doing away with syntax errors

Scratch uses a “block” model of programming to model indented code, where the curly braces in if(){} are part of a virtual object and the parentheses are fields that are the particular shape of a logical condition, which only fits if it’s syntactically appropriate, like a triangle block into a triangle hole. You can’t delete the second curly brace or indent it incorrectly because it’s just the other side of the if object. Other objects — other logical structures — are held within it or encapsulate it by wrapping around it, or they operate independently in another part of the code altogether.

That code typically works inside the stage (the environment) containing an actor (an object containing code) that probably has a sprite (a representation that you can see on the stage) that has its own code. Actors are intended to act as agents, running their own code and interacting by touching objects or colors, by calculating the direction of something else relative to their own center, and generally acting like characters and environmental features in a video game.

What this does at its best is ensure that any code you write (build?) will run. It will do something. And that means that you’re debugging your ideas, your algorithms, your processes, rather than hunting for syntax errors.
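One way to think about the shaped slots is as a type system you can see. The sketch below is my own approximation in C++, not how Scratch is built; `Sprite`, `Condition`, `Statement`, `XLessThan`, `MoveBy`, and `IfBlock` are hypothetical names. The point is that a statement-shaped block simply cannot be passed where a condition-shaped one is expected, and the body lives inside the if-block rather than between two separate braces.

```cpp
#include <cassert>
#include <memory>
#include <utility>
#include <vector>

struct Sprite { int x = 0; };  // hypothetical actor state

// Boolean-shaped block: the only shape that fits an if-slot.
struct Condition {
    virtual ~Condition() = default;
    virtual bool holds(const Sprite& sprite) const = 0;
};

// Stackable block: something that can sit in a script.
struct Statement {
    virtual ~Statement() = default;
    virtual void run(Sprite& sprite) const = 0;
};

using Script = std::vector<std::unique_ptr<Statement>>;

struct XLessThan : Condition {
    int limit;
    explicit XLessThan(int l) : limit(l) {}
    bool holds(const Sprite& sprite) const override { return sprite.x < limit; }
};

struct MoveBy : Statement {
    int steps;
    explicit MoveBy(int n) : steps(n) {}
    void run(Sprite& sprite) const override { sprite.x += steps; }
};

// The if-block owns its condition and its body, so there is no separate
// closing brace to delete or misplace.
struct IfBlock : Statement {
    std::unique_ptr<Condition> condition;
    Script body;
    IfBlock(std::unique_ptr<Condition> c, Script b)
        : condition(std::move(c)), body(std::move(b)) {
        assert(condition != nullptr);  // this sketch requires a filled slot
    }
    void run(Sprite& sprite) const override {
        if (condition->holds(sprite)) {
            for (const auto& block : body) block->run(sprite);
        }
    }
};
```

Trying to construct an `IfBlock` with a `MoveBy` in the condition slot is rejected by the compiler. That is the text-language version of a block that won't snap into the hole, except that in Scratch you find out with your hands rather than from an error message.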

Additionally, you can see the interactions and where they go wrong. You will often know that the problem is within a certain actor because you know something goes wrong when it touches another actor. So you know to look in those two actors first to figure out if the error is within their code.

A really important aspect of Scratch is that, on the Scratch website, you can look inside any Scratch program you find to see how the creator did whatever it is the program does. You can find solutions to problems by copying their code, find new inspiration and understanding, and improve on other people’s work.

Scratch, however, assumes that the best way to interact with a computer is by writing concatenated text, and that you just need help. It works to reduce typos by typing the text for you as you select from a palette. It’s a great way to get started, but it becomes cumbersome and illegible when a program starts to get complex.

It uses the 2D nature of a computer screen to allow you to organize chunks of code as you see fit, but the arrangement is stylistic, not functional. It makes no difference how close or in what direction pieces of code are or, indeed, whether those chunks of code even work. One will often find non-running examples alongside working code, as well as broken snippets that have been removed from the running code during the debugging process and have never been tidied up. And the broken code often looks just like the working, running code beside it.

But the biggest issue I’ve noticed when working with teenagers and Scratch is that it announces, asserts, demands that Scratch is For Kids. That gives it an entry into the creator-computer feedback loop, but it also insists on an exit point.

When a small child of, say, 8 comes across Scratch, it feels much like LEGO (who have a partnership with Scratch), where you can assemble things out of raw parts within creative constraints. It works! Hooray! They start learning programming concepts right away! Kids get into systems deep, and they’ll dig into Scratch like it’s Minecraft.

But if there’s one thing kids hate, it’s getting stuck using things that are for kids. They’ll hit its limits, and kids always want to use real tools. If you want a kid to learn how to use a tool, you give them one that’s sized for their hand, not a dull knife and a soft hammer to keep them safe. You give them small tools and teach them how they work, and you teach them how to recover from cutting themselves or hitting themselves on the thumb.

Scratch says firmly that, if you want to make a self-running program (one that runs on your phone or on a robot of your own design), it is time to stop using Scratch.

To be sure, Scratch’s basic engine is being used in projects like Blockly that convert Scratch constructions to other forms of code, which links the two. But such projects have often been started and then abandoned because the developers of those environments are not their users. Eric Raymond points out in The Cathedral and the Bazaar that developers improving their own tools, and tools being built by the people who use them, are critical to the long-term health of an Open Source project.

Furthermore, Scratch and its descendant environments make the assumption that the fundamental form of code is a one-dimensional list of characters. Even if we accept that this is a reasonable way for computer code to look, there’s no reason for a programming front end to follow this convention. There’s nothing inherent about a flow of logic that requires that particular form. It’s a matter of conceptual backward compatibility, not a matter of wisely designed interaction.

Max, Nord, and Axoloti: patch the planet

In the music world, a popular metaphor for signal flow is the “patch” — a representation of wires, like one that runs from a guitar to a pedal to an amplifier.

Wires are a pretty solid metaphor: waves of changing voltage come out of one system (the pickups on an electric guitar), then flow into another (the pedal). The pedal changes the shape of the waves, generating a signal from the incoming sound and outputting it to a jack just like the input jack. Then, the cable runs from the pedal to the amplifier, which gives the signal enough power to move a speaker cone to generate sound.

What’s particularly neat about this metaphor is that the pedal can do anything a pedal designer is interested in. You can plug a guitar into it, or a microphone, or another pedal. Some pedals do much more than alter the shape of the waveforms. Some play a controllable echo. Others synchronize other instruments to the same rhythm or alter lighting according to the sound being produced.

This metaphor is taken to its extreme in modular sound synthesis, where there need be no initial input of sound. Signals are generated, processed, and reproduced electronically. By plugging the output of one module into another, we can generate a vast, extremely complex signal system.

Such systems have been reproduced in digital form since the 1990s, but Max and the Nord Modular began treating the patches as a computer program, rather than a computer simulation of an analog signal system. Those systems are fairly prohibitive in cost, but have inspired many free and low-cost alternatives, like Pure Data and Axoloti.

To program an Axoloti synthesizer development board, the creator arranges modules and connects them by “wires”, color-coded for their sample rate. To take input from an external MIDI device, one runs a wire from a MIDI module’s note output to the pitch input on an oscillator, and the gate output to an amplifier module that also takes in the oscillator output.
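For contrast, here is roughly the same patch written as text. It is a sketch under my own assumptions, not Axoloti's API or the code it generates: `MidiOutputs`, `Oscillator`, `amplifier`, and `renderSample` are hypothetical names, and the oscillator is a plain sine wave.

```cpp
#include <cassert>
#include <cmath>

// Outputs of a hypothetical MIDI input module.
struct MidiOutputs {
    int note;   // MIDI note number; 69 is the A above middle C
    bool gate;  // true while a key is held down
};

// Equal-tempered tuning with A4 = 440 Hz.
double noteToHz(int note) {
    return 440.0 * std::pow(2.0, (note - 69) / 12.0);
}

// Sine oscillator module.
struct Oscillator {
    double phase = 0.0;  // position within one cycle, from 0 to 1
    double next(double hz, double sampleRate) {
        assert(sampleRate > 0.0);
        const double twoPi = 6.283185307179586;
        double out = std::sin(twoPi * phase);
        phase += hz / sampleRate;
        if (phase >= 1.0) phase -= 1.0;
        return out;
    }
};

// Amplifier module: passes its input only while the gate is open.
double amplifier(double input, bool gate) {
    return gate ? input : 0.0;
}

// The patch itself. Each local variable plays the part of one wire.
double renderSample(const MidiOutputs& midi, Oscillator& osc, double sampleRate) {
    double pitch = noteToHz(midi.note);         // MIDI note  -> oscillator pitch
    double wave = osc.next(pitch, sampleRate);  // oscillator -> amplifier input
    return amplifier(wave, midi.gate);          // MIDI gate  -> amplifier gate
}
```

In the text version, every wire has to be given a name and then found again by reading. In the patcher, the wire is just there, running from one jack to the other.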

You can see, in that paragraph, why it is better to draw the idea than to write it out in a list of characters.

Codi: a Coding Agent

Tina Quach introduced a program called Codi in her paper, Agent-based Programming Interfaces for Children: Supporting Blind Children in Creative Computing through Conversation. Codi is a speech-based code editor that listens to speech instructions and gives back speech feedback for existing coding structures. Because parentheses, brackets, carets, and braces are a particular typographical convention that does not follow any particular speech pattern, Quach had to invent new descriptive structures for programming.

When the space key is pressed, repeat the following three times. Play the hi na tabla sound. Wait 0.25 seconds. Play the hi tun tabla sound. Wait 0.25 seconds. That’s it.

The “That’s it.” command tells Codi that this is the end of a loop. “Repeat the following three times” tells the loop how many times to do it, of course, but the most interesting thing to me is that it starts with “When the space key is pressed.”

Codi starts a behavior at an event. In this case, it’s an event that comes from an input device, but there’s no reason it couldn’t come from an internal process.

In this regard, it’s a lot like a patcher environment, where the model is that signal flows through the program, starting with an event that precipitates other events.

Contrast that with this pseudocode:

keyboard.listen;

if (keyboard.listen(keyPressed) = " "){

for (i=0, i=3, i=i+1){

sound.play (“hi na tabla”);

delay (250);

sound.play (“hi tun tabla”)

delay (250);

{

}

In this C-like pseudocode, we’re looking around to see what is true. We’re not starting with an event; the program is ignorant of what we care about, so we have to tell it to look at what keys are being pressed by polling all the keys, then implicitly ignore all the keys that aren’t the one we’re looking at, then tell it how to count to three.

(Did you catch the syntax error in that pseudocode?)

I think event triggering — perhaps constructing processes out of, well, processes rather than objects — might really empower new and casual creators, drawing on our common perception of events in the rest of the world.
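To make the contrast concrete, here is a minimal sketch of the Codi example in an event-first shape. It is not how Codi works internally. The `Events` class and the `play` and `rest` stand-ins are hypothetical, and they record what would be performed instead of producing sound or actually waiting.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Minimal event dispatcher; the names here are hypothetical.
class Events {
public:
    // Registers a behavior to start whenever the named event happens.
    void when(const std::string& event, std::function<void()> behavior) {
        assert(behavior);  // an empty behavior could never run
        behaviors_[event].push_back(std::move(behavior));
    }
    // Announces an event; only behaviors waiting on it run.
    void emit(const std::string& event) const {
        auto found = behaviors_.find(event);
        if (found == behaviors_.end()) return;
        for (const auto& behavior : found->second) behavior();
    }
private:
    std::map<std::string, std::vector<std::function<void()>>> behaviors_;
};

// Stand-ins for audio output and timing: they record what would happen.
std::vector<std::string> performed;
void play(const std::string& sound) { performed.push_back("play " + sound); }
void rest(double) { performed.push_back("wait"); }

// The Codi example: the behavior hangs off the event, with no polling.
void installTablaPattern(Events& events) {
    events.when("space key pressed", [] {
        for (int i = 0; i < 3; ++i) {
            play("hi na tabla");
            rest(0.25);
            play("hi tun tabla");
            rest(0.25);
        }
    });
}
```

Pressing any other key does nothing here, and nobody had to write code to ignore it. The program only hears about the event it asked for. The C syntax is still in the way, of course, but the shape of the program now matches the shape of the spoken instructions.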

We don’t check every object in the room to see which ones we’re interacting with. We interact with the ones that stimulate our sensory system: the ones we see, feel, hear, taste, and smell.

OK, yes, you’re right: there aren’t a lot of human-computer interfaces that use taste or smell.

Treating Programming as a Regular Human-Computer Interface

By using the principles and techniques of UX, from Universal Design to iterative user testing, I think we can change our relationship as a society to our programmed environment — one that is becoming as pervasive as sidewalk curbs. None of us have been able to benefit yet from a programming interface design parallel to the curb cut.

Grace Hopper famously said, “It’s much easier for most people to write an English statement than to use symbols.” That priority has only become more critical as programming has become the province of a scribe class, rather than becoming a quotidian activity as promised at the dawn of the Personal Computer revolution. The result has been that we purchase computers that are programmed to do what the programmers’ employer wants them to do within the feedback loop, not what the user wants.

There are real questions to be addressed in the Liberation Technology interface design process. How do we use Universal Design principles to help the most experienced creators (dedicated and professional programmers) benefit from a design centered on the experience of those who have the fewest advantages: kids, the dyslexic, the neurodivergent, the blind? I feel pretty strongly that these questions are the doorways to a new kind of sociotechnological experience.